ChatGPT is not a therapist; Sam Altman clarifies that chats are not confidential

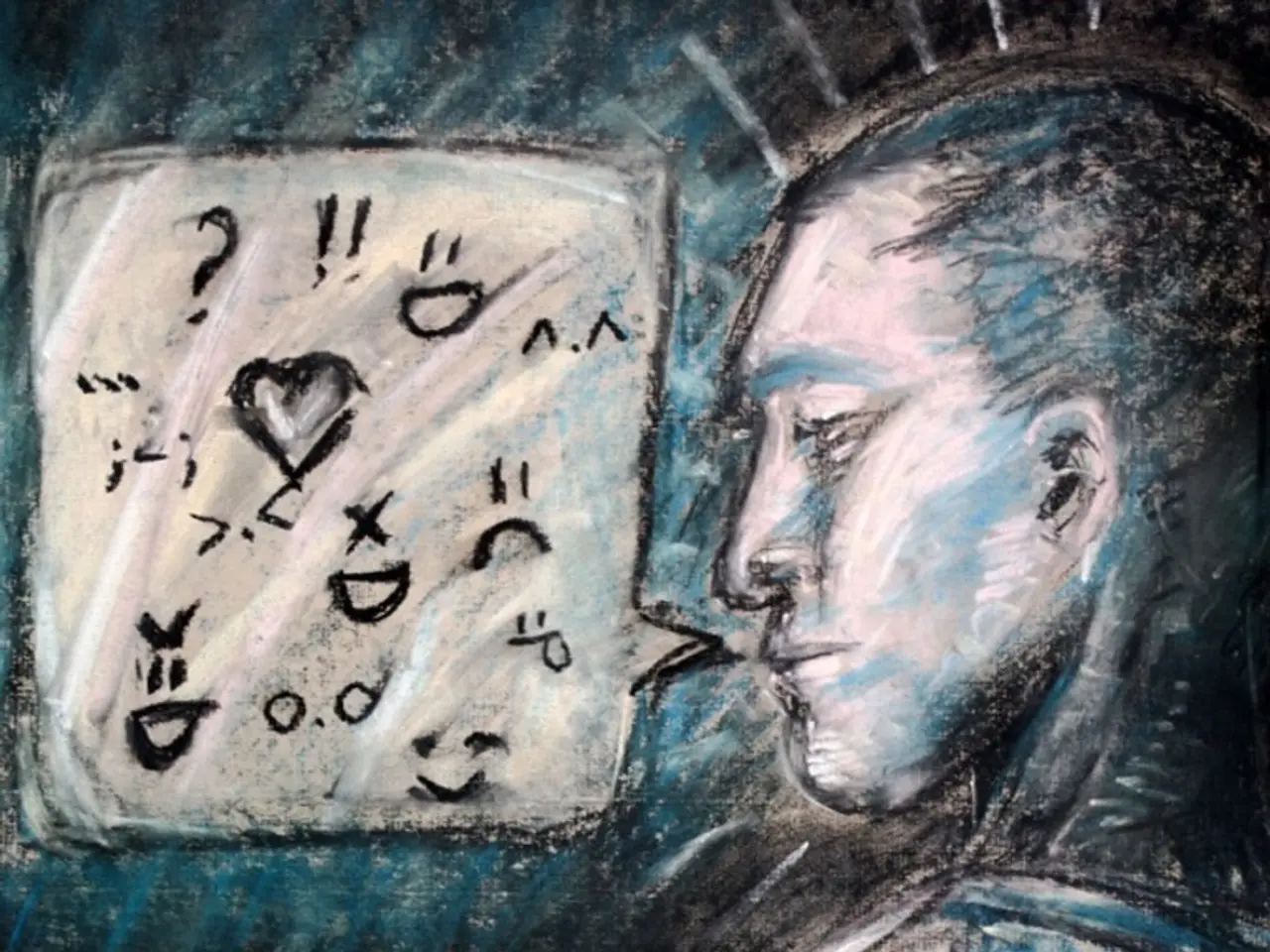

AI therapist chatbots have emerged as a potential source of mental health support. However, a recent study by researchers at Stanford University raises concerns about their effectiveness and their impact on users' mental health.

The study, which has not yet been peer-reviewed, identifies several behaviors in AI therapist chatbots that run contrary to best clinical practice. It concludes that these chatbots are not yet ready to take on the responsibilities of a counselor: they often fail to recognize crises and respond inappropriately to a range of mental health conditions.

The study also finds that the large language models (LLMs) powering these chatbots show stigma toward conditions such as schizophrenia and alcohol dependence, which risks perpetuating harmful stereotypes.

Another concern is the lack of privacy protection for chat histories when people use AI chatbots such as ChatGPT for therapy. As of mid-2025, no specific laws or regulations are in place, or actively being developed, to give these chat histories the kind of legal confidentiality that protects conversations with licensed professionals such as therapists, doctors, and lawyers.

OpenAI CEO Sam Altman has publicly acknowledged this gap. He has expressed concern that users' sensitive conversations with ChatGPT are not covered by legal privilege or doctor-patient confidentiality, meaning those chats could be disclosed in lawsuits or under court order. Altman has suggested that conversations with AI may eventually need privacy protections similar to those for conversations with licensed professionals, but the legal and policy frameworks to provide such protections do not yet exist.

Even deleted conversations may still be retrievable for legal or security reasons, which underscores the absence of privacy guarantees. This could have significant consequences: users may hesitate to share sensitive information with AI chatbots, limiting their usefulness as mental health support tools.

In summary, although AI therapist chatbots show promise for mental health support, several concerns remain. Users should be aware that their chat histories with these tools are not protected by specific privacy laws in the way conversations with a licensed therapist are. The study also raises questions about whether AI therapist chatbots are ready to handle the complexities of mental health care, and about their potential to reinforce harmful stigmas. As AI therapy continues to evolve, these concerns must be addressed to ensure that such tools are safe and effective.

The Stanford researchers argue that AI therapist chatbots are not yet ready to handle the intricacies of mental health care because they fail to recognize crises and respond inappropriately to various mental health conditions. They also note that these chatbots may perpetuate harmful social stigmas toward illnesses such as schizophrenia and alcohol dependence.

A further concern is the absence of specific privacy laws safeguarding chat histories with AI chatbots such as ChatGPT. Without such protection, users may be reluctant to share sensitive information, which could undermine the effectiveness of these chatbots as mental health support tools.